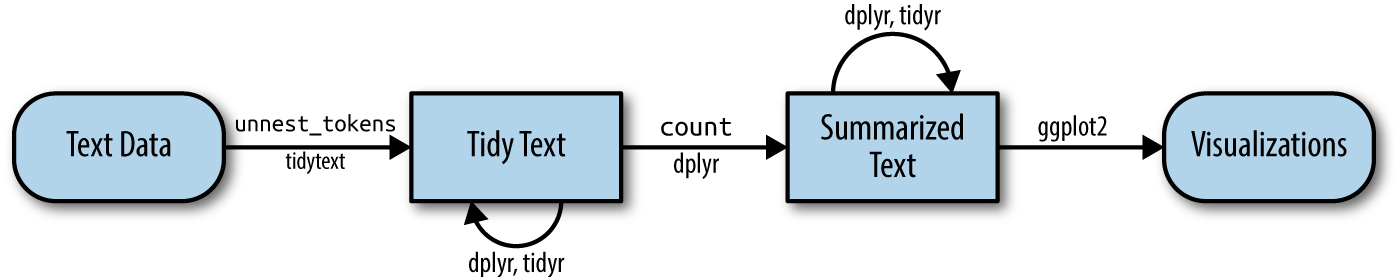

class: center, middle, inverse, title-slide .title[ # Text analysis: <br /> topic modeling and sentiment analysis ] .author[ ### MACSS 30500 <br /> University of Chicago ] --- # Agenda * Topic Modeling * LDA * Sentiment Analysis * Positive / negative sentiment * Application: TS! * Future applications: * Author prediction --- class: inverse, middle # Topic Modeling --- # Topic Modeling Method to organize and understand large collections of textual information (without reading them). How? -- By **finding groups of words ("topics") that go together.** Words that co-occur more frequently that chance alone would predict are assigned to the same topic. Example: organizing 1.2M books into 2000 topics see David Mimno [slides here](https://mimno.infosci.cornell.edu/slides/details.pdf) and [video here](https://vimeo.com/53080123). --- # Topic Modeling **Word Frequency** looks at the exact recurrence of each word in a corpus (using a simple count or a weighted count). Instead: **Topic Modeling** looks at how groups of words co-occur together (using probability). -- Intuition: The meaning of words is dependent upon the broader context in which they are used. --- # Topic Modeling A topic is a set of words that are associated with one another, showing an underlying common theme that is semantically interpretable by humans. A topic is similar to what a human would call a theme or a discourse: whenever a specific discourse is made, some words tend to come up more frequently than others. The goal of TM is to identify the discourses that characterize a collection of documents. --- # Topic Modeling One way to think about how TM… Imagine working through an article with a set of highlighters. As you read through the article: * you use a different color for the key words of themes within the article as you encounter them * when you were done, you could take all words of the same color and create separate lists (one per each topic) --- # Topic Modeling <img src="tm.png" width="90%" style="display: block; margin: auto;" /> --- # Topic Modeling: LDA TM is the name of a family of algorithms. The most common is **LDA or Latent Dirichlet Allocation**. This model assumes that: 1. every document in a corpus contains a mixture of topics that are found throughout the entire corpus 1. each topic is made of a (limited) mixture of characteristic words, which tend to occur together whenever the topic is displayed The topic structure is hidden ("latent"): we can only observe the documents and words, not the topics themselves; the goal is to infer such topics by the words and documents (more formally: topic modeling computes the conditional posterior distribution of latent variables, e.g. topics, given the observed variables, e.g. words in documents). --- # Topic Modeling: LDA **LDA works as follows:** 1. The researcher begins by setting an arbitrary number of topics (`k`) for the whole collection of documents. 1. The algorithm (Gibbs sampling) creates, simultaneously: * a first randomly chosen word-topic probability: distribution of topics over all observed words in the collection * a first randomly chosen document-topic probability: distribution of topics by document 1. Through reiterative attempts, the algorithm adjusts these initial distributions to provide a set of probabilities for every word-topic pair and for every document-topic pair. --- # Topic Modeling: LDA **LDA produces two types of output:** 1. words most frequently associated with each of the `k` topics specified by the researcher 1. documents most frequently associated with each of the `k` topics (the researcher defines a probability threshold) --- # Topic Modeling: LDA simple example We start with a simple example, then move to a real example in R. Imagine we have a corpus with the following five documents (each document is one sentence): **Document 1** I ate a banana and spinach smoothie for breakfast. **Document 2** I like to eat broccoli and bananas. **Document 3** Puppies and kittens are cute. **Document 4** My sister adopted a kitten yesterday. **Document 5** This cute hamster is eating a piece of broccoli. --- # Topic Modeling: LDA simple example **First, we need to transform these textual data in the appropriate form needed for LDA:** * input: * raw data * output: * create a vocabulary (remove stop words, lowercase, tokenize, etc.) * create a document-term matrix **Then, we run LDA:** * input: * the document-term matrix as input * the number of topics we want to generate (we decide them) * output: * the word-topic and document-topic probabilities --- # Topic Modeling: LDA simple example If we give to LDA the document-term matrix from these 5 documents, and we ask for 2 topics, LDA might produce something like: **Topic A:** 30% eat, 20% broccoli, 15% bananas, 10% breakfast, … **Topic B:** 20% dog, 20% kitten, 20% cute, 15% hamster, … -- **Document 1 and 2** 100% Topic A (we can label it "food") **Document 3 and 4**: 100% Topic B (we can label it "cute animals") **Document 5:** 60% Topic A, 40% Topic B --- # Topic Modeling: LDA example in R Download today's class materials to access the code. (`usethis::use_course("CFSS-MACSS/text-analysis-fundamentals-and-sentiment-analysis-and-tm")`) We have additional resources you can practice with as well! **Examples from the book**: Chapter 6, and the three case-study chapters + Chapter 2 to reshape the data into the appropriate format for LDA --- class: inverse, middle, center  --- # A thorough examination of Taylor Swift Lyrics -- Recall the following graphic:  -- We will move through this process using [this dataset of Taylor Swift albums and lyrics from Kaggle](https://www.kaggle.com/datasets/ishikajohari/taylor-swift-all-lyrics-30-albums?resource=download) --- ## Basic workflow for text analysis We can think at the basic workflow as a 4-step process: 1. Obtain your textual data: **Kaggle, check!** 1. Data cleaning and pre-processing **OOF THIS WAS A PAIN** *see end of slides for more detail* 1. Data transformation **building on last class's materials** 1. Perform analysis **the fun part** --- # Steps: Data cleaning and pre-processing (see: data/swift_data_raw) To find the data, I googled things like, 'taylor swift lyrics dataset' before finding the dataset on kaggle. I then spot-checked some of the albums and songs to be sure that things looked generally OK. (remember, once you use someone else's data, it becomes your problem!) I pulled all this in and tried to use specific code and packages that we've used in class as much as possible so you could see where the pieces came from. Without belaboring this too much, you can see in today's files where I have the raw data I imported and cleaned, and how I timmed out unwanted characteristics / elements, e.g. [Chorus]. --- # Steps: Data cleaning and pre-processing (see: data/swift_data_raw) Here is a snippet of what the raw structure was: `data > albums > 1989 > song.txt` -- Here is what the raw txt file looked like: ``` r strwrap(read_lines(here("..", "text-analysis-fundamentals-and-sentiment-analysis-and-tm", "data", "swift_data_raw", "Albums", "1989", "BlankSpace.txt")), 80) ## [1] "237 ContributorsTranslationsTürkçeEspañolPortuguêsPolskiΕλληνικάFrançaisBlank" ## [2] "Space Lyrics[Verse 1]" ## [3] "Nice to meet you, where you been?" ## [4] "I could show you incredible things" ## [5] "Magic, madness, heaven, sin" ## [6] "Saw you there and I thought" ## [7] "\"Oh, my God, look at that face" ## [8] "You look like my next mistake" ## [9] "Love's a game, wanna play?\" Ayy" ## [10] "New money, suit and tie" ## [11] "I can read you like a magazine" ## [12] "Ain't it funny? Rumors fly" ## [13] "And I know you heard about me" ## [14] "So, hey, let's be friends" ## [15] "I'm dying to see how this one ends" ## [16] "Grab your passport and my hand" ## [17] "I can make the bad guys good for a weekend" ## [18] "[Chorus]" ## [19] "So, it's gonna be forever" ## [20] "Or it's gonna go down in flames?" ## [21] "You can tell me when it's over, mm" ## [22] "If the high was worth the pain" ## [23] "Got a long list of ex-lovers" ## [24] "They'll tell you I'm insane" ## [25] "'Cause you know I love the players" ## [26] "And you love the game" ## [27] "'Cause we're young and we're reckless" ## [28] "We'll take this way too far" ## [29] "It'll leave you breathless, mm" ## [30] "Or with a nasty scar" ## [31] "Got a long list of ex-lovers" ## [32] "They'll tell you I'm insane" ## [33] "But I've got a blank space, baby" ## [34] "And I'll write your name" ## [35] "" ## [36] "[Verse 2]" ## [37] "Cherry lips, crystal skies" ## [38] "I could show you incredible things" ## [39] "Stolen kisses, pretty lies" ## [40] "You're the king, baby, I'm your queen" ## [41] "Find out what you want" ## [42] "Be that girl for a month" ## [43] "Wait, the worst is yet to come, oh, no" ## [44] "Screaming, crying, perfect storms" ## [45] "I can make all the tables turn" ## [46] "Rose garden filled with thorns" ## [47] "Keep you second guessing, like" ## [48] "\"Oh, my God, who is she?\"" ## [49] "I get drunk on jealousy" ## [50] "But you'll come back each time you leave" ## [51] "'Cause, darling, I'm a nightmare dressed like a daydream" ## [52] "You might also like[Chorus]" ## [53] "So, it's gonna be forever" ## [54] "Or it's gonna go down in flames?" ## [55] "You can tell me when it's over, mm" ## [56] "If the high was worth the pain" ## [57] "Got a long list of ex-lovers" ## [58] "They'll tell you I'm insane" ## [59] "'Cause you know I love the players" ## [60] "And you love the game" ## [61] "'Cause we're young and we're reckless (Oh)" ## [62] "We'll take this way too far" ## [63] "It'll leave you breathless (Oh-oh), mm" ## [64] "Or with a nasty scar" ## [65] "Got a long list of ex-lovers" ## [66] "They'll tell you I'm insane (Insane)" ## [67] "But I've got a blank space, baby" ## [68] "And I'll write your name" ## [69] "" ## [70] "[Bridge]" ## [71] "Boys only want love if it's torture" ## [72] "Don't say I didn't, say I didn't warn ya" ## [73] "Boys only want love if it's torture" ## [74] "Don't say I didn't, say I didn't warn ya" ## [75] "" ## [76] "[Chorus]" ## [77] "So, it's gonna be forever" ## [78] "Or it's gonna go down in flames?" ## [79] "You can tell me when it's over (Over), mm" ## [80] "If the high was worth the pain" ## [81] "Got a long list of ex-lovers" ## [82] "They'll tell you I'm insane (I'm insane)" ## [83] "'Cause you know I love the players" ## [84] "And you love the game (And you love the game)" ## [85] "'Cause we're young and we're reckless (Yeah)" ## [86] "We'll take this way too far (Ooh)" ## [87] "It'll leave you breathless, mm" ## [88] "Or with a nasty scar (With a nasty scar)" ## [89] "Got a long list of ex-lovers" ## [90] "They'll tell you I'm insane" ## [91] "But I've got a blank space, baby" ## [92] "And I'll write your name1KEmbed" ``` --- # Steps: Cleaning (see: data/swift_data_raw) Obviously, there's a lot of work that was needed here. I did a rough clean -- for publication, I would probably do another pass or two and do some more spot checks. (I like to use a random number generator to select a row of my dataframe to verify that everything is good / looks OK). -- **Steps**: 1. Generate list of file paths (note I had to loop through albums to get songs). (HW05 was helpful for this) 1. Extract album title * Get title from path string * Add spaces back in where needed 1. Extract song title from file path * Get title from path string * Add spaces back in where needed 1. Read in data: * Read lines from path * Remove the top line * Get rid of any chunks about song structure 1. Merge data (Album, Song, Lyrics) and export as data frame --- # Steps: Data transformation * Remove stop words (sort of interesting, what do you do about songs like, 'the 1'?). * Perform analysis on entire corpus and by album: * Frequency of words (histogram and wordcloud) * Sentiment of words (positive / negative) * Emotional score for text and albums * Consider future steps: "eras" and "eras tour"??? --- # Steps: Perform analysis on entire corpus vs albums For this, we want to explore the most common words used by Taylor Swift overall. We can then consider how we might want to break things out in future anlaysis. *(Note, this was a rough clean, so we have some words that don't fully make sense in here).* -- .panelset[ .panel[.panel-name[Overall Analysis] <div class="figure"> <img src="../../img/ts_frequent_words.png" alt="Histogram of most frequent words in Taylor Swift songs" width="30%" /> <p class="caption">Histogram of most frequent words in Taylor Swift songs</p> </div> ] .panel[.panel-name[Wordcloud] <div class="figure"> <img src="../../img/ts_wordcloud.png" alt="Wordcloud of most frequent tokens in Taylor Swift songs" width="50%" /> <p class="caption">Wordcloud of most frequent tokens in Taylor Swift songs</p> </div> ] .panel[.panel-name[Wordcloud: Pos/Neg] <div class="figure"> <img src="../../img/ts_wordcloud_pos_neg.png" alt="Most frequent Positive (dark gray) and Negative (light gray) tokens in Taylor Swift Lyrics" width="40%" /> <p class="caption">Most frequent Positive (dark gray) and Negative (light gray) tokens in Taylor Swift Lyrics</p> </div> ] .panel[.panel-name[Analysis by Album] <div class="figure"> <img src="../../img/ts_frequent_words_album.png" alt="Histogram of most frequent words in Taylor Swift songs by album" width="50%" /> <p class="caption">Histogram of most frequent words in Taylor Swift songs by album</p> </div> ] ] --- # Steps: Visualizations (Wordcloud) Here, we can explore the word cloud generated from the text. THESE ARE NOT FOR ANALYSIS. YES THEY ARE COOL. NO THEY ARE NOT ANALYSIS!!! -- <div class="figure"> <img src="../../img/ts_wordcloud.png" alt="Word cloud of popular tokens" width="60%" /> <p class="caption">Word cloud of popular tokens</p> </div> --- ## Trajectory: Thinking about word use <div class="figure"> <img src="../../img/ts_frequent_words_album.png" alt="Histogram of most frequent words in Taylor Swift songs by album" width="70%" /> <p class="caption">Histogram of most frequent words in Taylor Swift songs by album</p> </div> Across each album, we can get a sense of a trajectory: time and love seem to feature in quite frequently, while we see a shift from 'girl' in her early albums to 'woman' in later ones. We can dig into this a bit more deeply as we look at the sentiment of the words. --- ## Trajectory: Thinking about word use (tf-idf) When calculating tf-idf, we're looking for words that are more common relative to the rest of the corpus. We can think of this as an 'album signature' in our case. Here, we can see that we get a collection of different words than when we just look at the most frequent words. <div class="figure"> <img src="../../img/ts_idf_words.png" alt="Histogram of most frequent words in Taylor Swift songs by album" width="70%" /> <p class="caption">Histogram of most frequent words in Taylor Swift songs by album</p> </div> Across each album, we can get a sense of a trajectory: time and love seem to feature in quite frequently, while we see a shift from 'girl' in her early albums to 'woman' in later ones. We can dig into this a bit more deeply as we look at the sentiment of the words. --- # (reminder / review) LDA: TOPICS LDA (Laten Dirichlet Allocation) is one method for fitting a topic model. It offers a means of *unsupervised* classification of a collection of documents. (I think of it as you dump a bunch of items into a pile and it sorts them for you based on patterns it finds...although the same item could appear in multiple piles). Small / simple example: suppose you have a list of a bunch of animals that you want grouped. You give it the following: dog, cat, parakeet, tiger, polar bear, lion, penguin. If you tell the model you want three topics, it might provide the following groups: * Topic 1: Dog, cat, parakeet * Topic 2: Parakeet, penguin, polar bear * Topic 3: Cat, tiger, lion -- It's up to you, the researcher, to determine what meaning or value lies within these topics / allocations. You may also determine how many topics you would like (more topics can mean a slower model). If you had said two topics, you might have gotten the following instead: * Topic 1: Dog, cat, parakeet * Topic 2: Penguin, polar bear, tiger, lion Notice that the 'sense' you make of the topics might be different in the two situations. --- # LDA: Two topic model Let's start with a simple two-topic model (we'll get more complicated soon!) <div class="figure"> <img src="../../img/ts_frequent_words_topics.png" alt="Frequent words in a two-topic model" width="50%" /> <p class="caption">Frequent words in a two-topic model</p> </div> -- We can think about what we might 'call' these topics and how we might want to explore this further. (Again, note this is unsupervised!) --- # LDA: understanding the right number of topics Let's try that again, but now with different numbers of topics. I have a visualization that is a little different from the text because I felt like it helped visualize the results better. We could do boxplots, but those were a bit messy for this particular dataset. -- .panelset[ .panel[.panel-name[Two topics] <div class="figure"> <img src="../../img/ts_2topics.png" alt="Text plot of songs, colored by albums (two topics)" width="40%" /> <p class="caption">Text plot of songs, colored by albums (two topics)</p> </div> ] .panel[.panel-name[Three topics] <div class="figure"> <img src="../../img/ts_3topics.png" alt="Text plot of songs, colored by albums (three topics)" width="40%" /> <p class="caption">Text plot of songs, colored by albums (three topics)</p> </div> ] .panel[.panel-name[Ten topics!] <div class="figure"> <img src="../../img/ts_10topics.png" alt="Text plot of songs, colored by albums (ten topics)" width="40%" /> <p class="caption">Text plot of songs, colored by albums (ten topics)</p> </div> ] ] --- # Reflection: I think two topics makes sense here, of our options. However, I would be interested in pooling albums and reassessing to see. Alternatively, we might want to think about other ways to explore the meaning / distinctions. --- # Topic modeling: pros/cons * Topic models **account for "multiplexity":** a given document is unlikely to fall precisely and fully into a single topic * Topic models **do not care about the order of words** “dog eats food" is the same as "food eats dog" * To determine the "right" number of topics: no fixed rule, it is a try-error process, ultimately **the researcher decides** (there are some metrics, such as perplexity and coherence score but are beyond our goals) * Topics are **unlabeled:** they are just a bunch of words, it is up to the researcher to read, interpret, and label them * Topic models are **no substitute for human interpretation of a text:** they are a way of making educated guesses about how words cohere into different latent themes by identifying patterns in the way they co-occur within documents. Many people are somewhat disappointed when they discover their model produces uninformative results --- # LDAvis LDAvis is a an interactive visualization of LDA model results https://github.com/cpsievert/LDAvis 1. What is the meaning of each topic? 1. How prevalent is each topic? 1. How do the topics relate to each other? --- class: inverse, middle # Sentiment Analysis --- # Sentiment Analysis Use of NLP and programming to **study emotional states and subjective information in a text**. In practice sentiment analysis is usually applied: * to determine the polarity of a text: whether a text is positive, negative, or neutral * to detect specific feelings and emotions: angry, sad, happy, etc. * examples: analyzing costumer feedback, classify movies review Different from Topic Modeling, Sentiment Analysis it is usually supervised (e.g., needs a labeled input to compare our text to) --- # Sentiment Analysis: our approach Our approach to sentiment analysis (consistent with the book, Chapter 2): * consider the text a combination of its individual words, and * consider the sentiment content of the text as the sum of the sentiment content of the individual words Notice: "This isn’t the only way to approach sentiment analysis, but it is an often-used approach, and an approach that naturally takes advantage of the tidy tool ecosystem." --- # The `sentiments` datasets The `syzuhet` package provides access to several sentiment/emotion lexicons: + **AFINN** from Finn Årup Nielsen (words classified with on a scale from -5 to +5) + **bing** from Bing Liu and collaborators (words classified into binary categories: negative and positive) + **nrc** from Saif Mohammad and Peter Turney (words classified in multiple categories) See: https://www.tidytextmining.com/sentiment.html#the-sentiments-datasets --- # The `sentiments` datasets These dictionaries constitute our gold standard (e.g., our labeled sentiments): we use them to classify our texts. Dictionary-based methods like these find the total sentiment of a piece of text by adding up the individual sentiment scores for each word in the text. --- # Sentiment Analysis: Examples **Examples from the book**: Chapter 2, and the three case-study chapters **Another example (in today's class-materials)**: sentiment analysis of text from The Office **Another example (in today's class-materials)**: sentiment analysis of Taylor Swift albums --- class: middle, inverse, center # Back to TS  --- # Steps: Analysis -- Sentiment Here, we can look at the sentiment within her work: specifically, what are the positive and negative words used, both overall and by album? -- .panelset[ .panel[.panel-name[Overall Analysis] <div class="figure"> <img src="../../img/ts_sentiment.png" alt="Histogram of positive and negative sentiment in Taylor Swift songs" width="40%" /> <p class="caption">Histogram of positive and negative sentiment in Taylor Swift songs</p> </div> ] .panel[.panel-name[Analysis by Album: Positive] <div class="figure"> <img src="../../img/ts_positive_words_album.png" alt="Histogram of positive sentiment in Taylor Swift songs by album" width="40%" /> <p class="caption">Histogram of positive sentiment in Taylor Swift songs by album</p> </div> ] .panel[.panel-name[Analysis by Album: Negative] <div class="figure"> <img src="../../img/ts_negative_words_album.png" alt="Histogram of negative sentiment in Taylor Swift songs by album" width="40%" /> <p class="caption">Histogram of negative sentiment in Taylor Swift songs by album</p> </div> ] ] --- # Steps: Analysis (Negative Sentiment) Again, here some expertise / background on Taylor Swift can be helpful to make sense of our analysis. We can see that her early work has less 'intense' (generally) language and the negative sentiment features words like, 'crazy,' 'sad,' and 'bad'. With Reputation, we see a shift (this makes sense given the album and its role in her discography). Here, we see words like 'hate' come in and, as we move into more recent albums, words like 'death,' 'shit,' 'kill.' <div class="figure"> <img src="../../img/ts_negative_words_album.png" alt="Histogram of negative words by album" width="45%" /> <p class="caption">Histogram of negative words by album</p> </div> --- # Steps: Analysis (Negative Sentiment) With positive words, we see a lot of consistency ('like') and while there are some more 'innocent' words appearing with more frequency in earlier albums, we don't see as much change / evolution as we did with the negative words. If anything, the language has gotten more direct in the later work (which, particularly thinking about 1989 and Midnights, makes sense). -- <div class="figure"> <img src="../../img/ts_positive_words_album.png" alt="Histogram of postive words used by album" width="45%" /> <p class="caption">Histogram of postive words used by album</p> </div> --- # Steps: Visualizations -- <div class="figure"> <img src="../../img/ts_sentiment_album.png" alt="Emotional arc of TS albums" width="40%" /> <p class="caption">Emotional arc of TS albums</p> </div> --- class: inverse, middle, center  --- # Recap: * There are methods for analyzing text and getting a sense of the common ideas and topics that emerge * Unsupervised (easier), supervised (need training data of some kind) can provide techniques to offer deeper insights * Sentiment analysis looks at overall valence of words * Lots of room for researcher to interpret: can be difficult to do well and needs some expertise to evaluate. --- class: inverse # Acknowledgements The content of these slides is derived in part from Sabrina Nardin and Benjamin Soltoff’s “Computing for the Social Sciences” course materials, licensed under the CC BY NC 4.0 Creative Commons License. I developed the Taylor Swift example using data from [Kaggle](https://www.kaggle.com/datasets/ishikajohari/taylor-swift-all-lyrics-30-albums/versions/12?resource=download) and building on code from [Text Mining in R](https://www.tidytextmining.com/). Any errors or oversights are mine alone. <!-- <!-- # THANK YOU ALL FOR A GREAT CLASS! I have genuinely enjoyed getting to know you and your work and hope you keep in touch regarding how you use R in the future! --> <!-- <!-- We have one assignment remaining. Have a great rest of your summer! -->